Amazon Web Services (AWS) has made quite a splash with the launch of the next generation of two custom-designed chip families: the AWS Graviton4 general-purpose processor and the AWS Trainium2 AI training chip. Unveiled at the AWS re:Invent event in Las Vegas, these chips deliver a leap in price performance and energy efficiency for a wide array of customer workloads, including machine learning (ML) training and generative artificial intelligence (AI) applications.

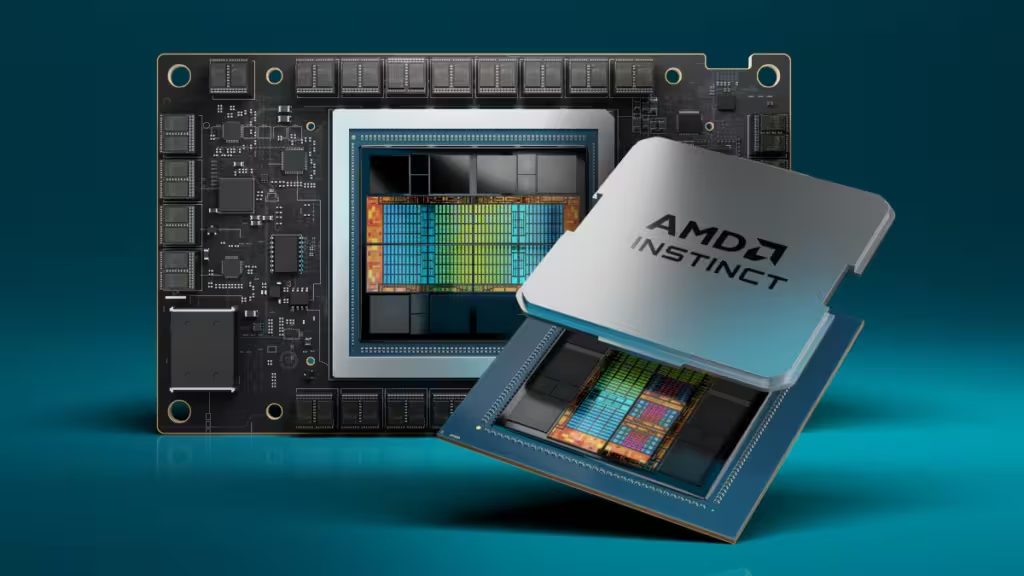

The announcement also represents a giant step forward in the company's custom silicon strategy, which stretches back over a decade. Innovating at the silicon level gives AWS greater control over its infrastructure and costs while reducing its dependence on other companies such as Nvidia, AMD, and Intel for critical server components. By building capabilities like security and workload optimization directly into the hardware, the company can also enhance performance, reduce power consumption, and offer customers a compelling alternative to servers built on one-size-fits-all merchant silicon for specific tasks and applications.

Hailed as the most powerful and energy-efficient AWS processor to date, the Graviton4 is a general-purpose SoC that lets the company handle diverse cloud workloads on its own silicon. With up to 30% better compute performance, 50% more cores, and 75% more memory bandwidth than the Graviton3, the processor improves both performance and energy efficiency across a range of applications running on Amazon Elastic Compute Cloud (Amazon EC2). As a result, it enables AWS to reduce overall costs and gives its customers greater flexibility in choosing which processors run specific workloads.

The Trainium2 trains foundation models and large language models (LLMs) up to four times faster than its predecessor. The chip is designed for deployment in EC2 UltraClusters of up to 100,000 chips, enabling sophisticated models to be trained in a fraction of the usual time with up to twice the energy efficiency. This advance is especially important for today's generative AI applications, which rely on massive datasets and demand scalable, high-performance compute capacity.

With the unveiling of Graviton4 and Trainium2, AWS has not only strengthened its lineup of in-house silicon platforms but also signaled its commitment to a leadership position in the data center AI processor market. Expanding cooperation with partners in Taiwan's semiconductor industry will be critical to achieving this objective.

Longtime technology industry fan here in Taiwan.