Because many of the technical details of the AMD Instinct MI300X GPU and MI300A APU were already available before the event, perhaps the biggest news from yesterday’s launch was that company executives raised their estimate of the total data center AI processor market to $45 billion, up from $30 billion in June, and said they expect it to reach $400 billion in 2027.

That gives AMD a huge opportunity to ramp up volumes of the MI300X and MI300A, assuming, of course, that the company can overcome its current supply constraints to meet demand from Meta, Microsoft, and Oracle, as well as from OEMs such as Dell, HPE, Lenovo, Supermicro, Asus, and Gigabyte.

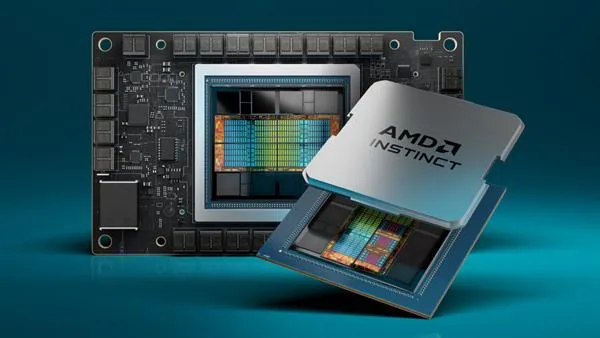

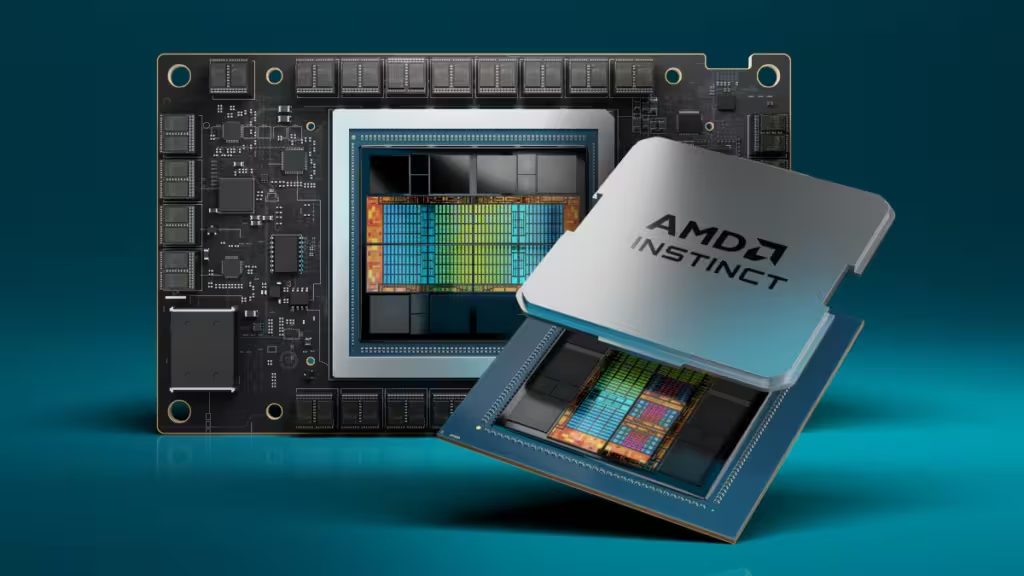

Even though the AMD Instinct MI300 Series appears very competitive from a performance standpoint judging by the benchmark numbers presented at the event, AMD has work to do on the software side to make it easier for AI developers to move their models and applications across to its new hardware.

The launch of the ROCm 6.0 software stack for the latest MI300 accelerators, which includes support for AI workloads such as generative AI and large language models, is a step in the right direction, but AMD is still a long way behind the highly sophisticated developer platforms, tools, and support programs that Nvidia provides.

Bridging this gap will be essential for AMD to fully leverage the impressive advances it has made with the Instinct MI300X GPU and MI300A APU and solidify its position as a compelling alternative to Nvidia in the data center AI processor market.

Longtime technology industry fan here in Taiwan.