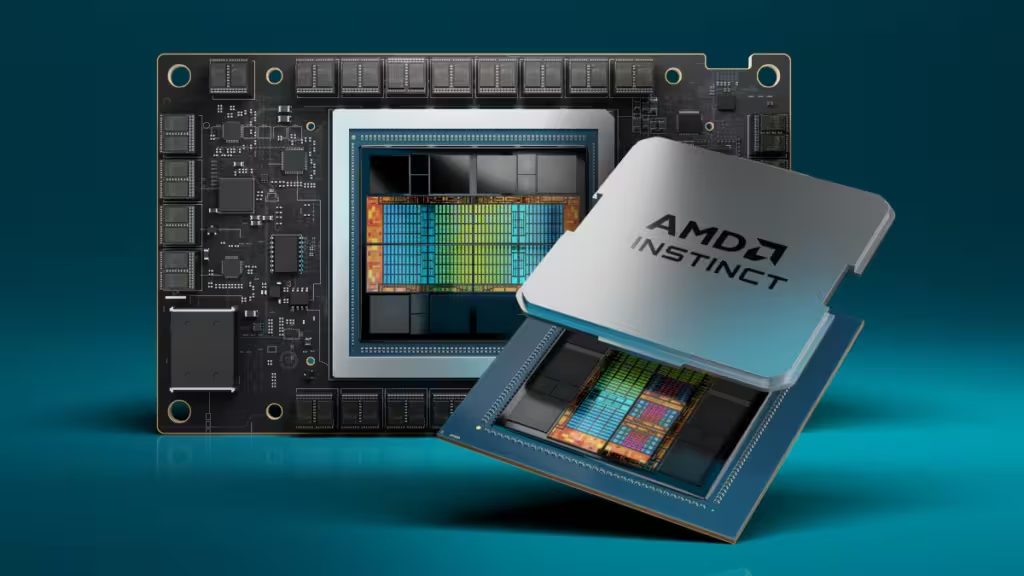

The December 6 launch of the highly anticipated AMD Instinct MI300X and MI300A accelerators promises to add some much-needed competition for Nvidia in the AI GPU market.

The AMD Instinct MI300 series features a chiplet design and uses TSMC’s SoIC 3D inter-chip stacking and CoWoS advanced packaging technologies. The MI300X accelerator is positioned against Nvidia's Hopper GPUs in the high-end AI performance segment. Combining 153 billion transistors with support for up to 192GB of HBM3 memory, it is designed to provide the compute and memory capacity required for large language model inference and generative AI workloads.

The MI300A APU features an integrated CPU and GPU package and is expected to be available on SXM (Server PCI Express Module) and PCI-Express modules from various ecosystem partners. Developers can optimize hardware performance using the AMD ROCm software stack, which includes a broad set of programming models, tools, compilers, libraries, and runtimes for AI and HPC solution development on AMD GPUs.

According to news reports circulating in Taiwan, the MI300 series is expected to ship roughly 300,000 to 400,000 units in 2024. Gigabyte and ASRock are said to be among the Taiwanese manufacturers set to release MI300 series servers in Q1 2024.
